When introducing my passion for data science, I’d always bring up A/B testing.

And people would ask: why A/B tests?

The Problem with Gut Feelings in Product Development

Picture this: you’re in a meeting room, and the highest-paid person announces their brilliant idea for improving user engagement. Everyone nods in agreement. Six months later, the “improvement” has actually hurt the product’s performance.

Sound familiar?

In my own experience, I see it happen almost every day.

This plays out across the tech industry because we consistently overestimate our ability to predict what users want. As a data scientist, I’ve witnessed firsthand how even seasoned product managers and designers, brilliant people with years of experience, can be spectacularly wrong about how users will actually respond. Real-world data supports this:

Only one third of the ideas tested at Microsoft improved the metric(s) they were designed to improve.

—Ron Kohavi, Trustworthy Online Controlled Experiments

The sad truth is that human intuition, while valuable for generating hypotheses, is remarkably poor at both predicting and assessing the actual value of product changes—even tech giants with world-class talent get it wrong most of the time. Without controlled experimentation, we’re essentially flying blind, making decisions based on opinions rather than evidence.


A/B Testing: The Scientific Method for Product Development

A/B testing transforms product development from guesswork into science. By randomly splitting users into control and treatment groups, we can isolate the causal impact of specific changes on user behavior (measured with business metrics). This isn’t just about getting numbers—it’s about getting numbers you can trust.
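A minimal sketch of how the random split is often implemented: hash each user ID together with an experiment name, so assignment looks random across users but stays stable for any single user. The function name `assign_variant`, the experiment name `tutorial_v2`, and the choice of SHA-256 are illustrative assumptions, not a description of any particular company’s system.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically assign a user to "control" or "treatment".

    Hashing the experiment name together with the user ID gives each user a
    stable pseudo-random position in [0, 1) for that experiment.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes as an unsigned integer and scale to [0, 1).
    position = int.from_bytes(digest[:8], "big") / 2**64
    return "treatment" if position < treatment_share else "control"


if __name__ == "__main__":
    # Hypothetical experiment name; 10,000 synthetic users.
    counts = Counter(assign_variant(f"user-{i}", "tutorial_v2") for i in range(10_000))
    print(counts)  # expect roughly half in each group
    # The same user always lands in the same group.
    assert assign_variant("user-42", "tutorial_v2") == assign_variant("user-42", "tutorial_v2")
```

Salting the hash with the experiment name keeps the splits of different experiments roughly independent of each other, which is one reason this style of assignment scales to many concurrent tests.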

During my time as a game analyst at Rayark, a mobile game development company in Taiwan, I used A/B testing to optimize our game tutorial experience, directly improving next-day retention rates. The beauty of controlled experiments lies in their ability to cut through the noise of external factors and reveal genuine cause-and-effect relationships.
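To judge whether a retention lift is genuine rather than noise, one common approach for a proportion such as next-day retention is a two-proportion z-test. This is a generic sketch, not necessarily the analysis used at Rayark; the function name and the counts in the usage block are hypothetical.

```python
import math


def two_proportion_ztest(successes_a: int, n_a: int, successes_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for H0: p_a == p_b (e.g., next-day retention rates).

    Uses the pooled proportion for the standard error and the normal
    approximation, which assumes independent users and reasonably large samples.
    Returns (z statistic, two-sided p-value).
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: retained users / exposed users in each arm.
    z, p = two_proportion_ztest(4_000, 10_000, 4_200, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value says the observed gap would be unlikely if both arms shared the same true retention rate; it does not by itself say the lift is large enough to matter for the business.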

Unlike correlation-based analysis, which can be misleading due to confounding variables, A/B testing provides the statistical rigor needed to make confident product decisions. This scientific approach enables teams to iterate rapidly, fail fast when ideas don’t work, and scale successful innovations with confidence.
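A small simulation makes the confounding point concrete. It assumes a hypothetical world in which highly engaged users are both more likely to opt into a feature and more likely to return anyway, while the feature’s true effect on retention is 2 percentage points. All parameters (`true_effect`, the engagement and opt-in rates) are made up for illustration.

```python
import random


def simulate(n: int = 200_000, true_effect: float = 0.02, seed: int = 0) -> tuple[float, float]:
    """Return (observational estimate, randomized estimate) of the feature's effect.

    Hypothetical world: half the users are highly engaged; engaged users are
    far more likely to opt in AND more likely to be retained regardless.
    """
    rng = random.Random(seed)

    def retention_prob(engaged: bool, treated: bool) -> float:
        # Baseline retention depends on engagement (the confounder);
        # the feature adds `true_effect` on top for treated users.
        return (0.6 if engaged else 0.3) + (true_effect if treated else 0.0)

    def diff_in_means(rows: list[tuple[bool, bool]]) -> float:
        treated = [retained for is_treated, retained in rows if is_treated]
        control = [retained for is_treated, retained in rows if not is_treated]
        return sum(treated) / len(treated) - sum(control) / len(control)

    observational, randomized = [], []
    for _ in range(n):
        engaged = rng.random() < 0.5
        # Observational data: users self-select into the feature.
        opted_in = rng.random() < (0.8 if engaged else 0.2)
        observational.append((opted_in, rng.random() < retention_prob(engaged, opted_in)))
        # Experiment: a coin flip decides treatment, independent of engagement.
        assigned = rng.random() < 0.5
        randomized.append((assigned, rng.random() < retention_prob(engaged, assigned)))

    return diff_in_means(observational), diff_in_means(randomized)


if __name__ == "__main__":
    naive, experimental = simulate()
    print(f"observational estimate: {naive:.3f}")
    print(f"randomized estimate:    {experimental:.3f}")
```

Under these assumptions the opt-in group is 80% engaged users versus 20% among non-adopters, so the observational comparison lands near 20 points, about ten times the true effect, while the coin-flip comparison stays close to 2 points.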

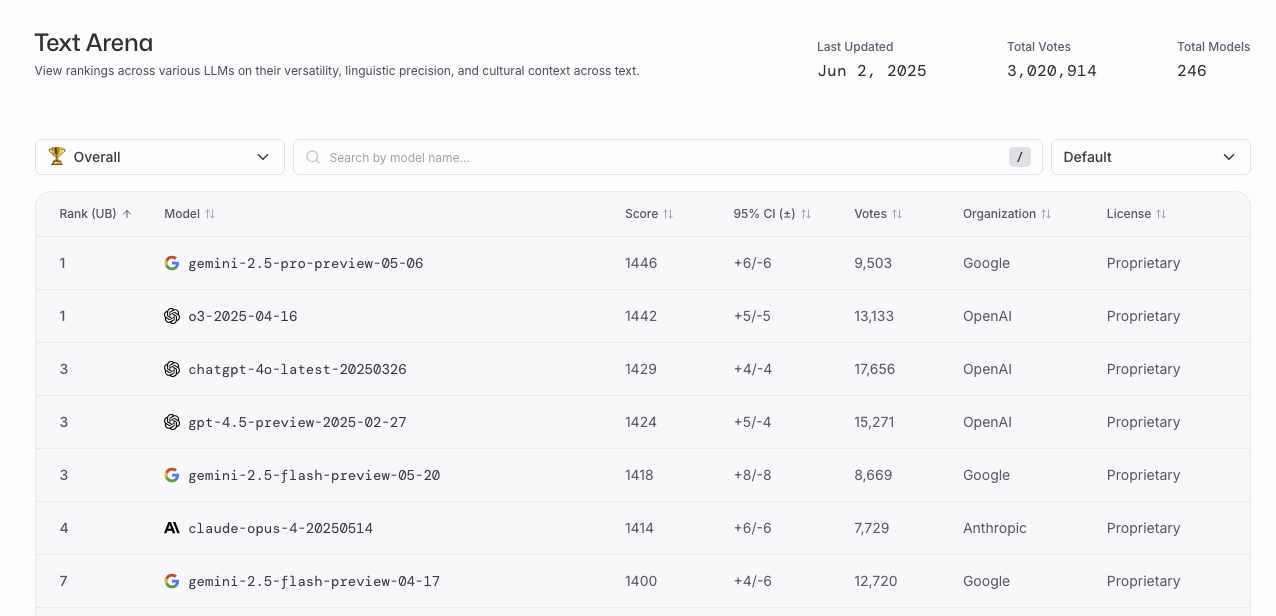

A/B Testing is Non-Negotiable

In an era of fierce digital competition and rapidly evolving technology, the ability to quickly distinguish what works from what doesn’t can determine survival. Even in the age of AI, controlled experimentation remains essential. Consider Chatbot Arena (lmarena.ai), where users see responses from two anonymous, randomly selected models side by side and vote for the better one, so the rankings reflect real user preferences rather than static benchmarks (the offline metrics often reported in research papers). The fact that AI models also need A/B-style comparisons to produce reliable leaderboards demonstrates a crucial principle:

whether you’re testing a new feature, an AI model, or a redesigned UI component, empirical validation trumps assumptions every time.
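To illustrate how pairwise votes can become a leaderboard, here is the classic Elo update applied to a list of head-to-head battles. This is a simplified sketch: the model names and votes are placeholders, and Chatbot Arena’s published rankings use their own statistical methodology, which may differ from this simple online update.

```python
from collections import defaultdict


def elo_ratings(battles, k: float = 32.0, base: float = 1000.0) -> dict[str, float]:
    """Turn pairwise votes into Elo ratings.

    `battles` is an iterable of (model_a, model_b, winner) tuples, where
    winner is "a", "b", or "tie". Ratings start at `base`.
    """
    ratings: dict[str, float] = defaultdict(lambda: base)
    score_for_a = {"a": 1.0, "b": 0.0, "tie": 0.5}
    for model_a, model_b, winner in battles:
        r_a, r_b = ratings[model_a], ratings[model_b]
        # Expected score of model A under the Elo logistic curve (400-point scale).
        expected_a = 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))
        s_a = score_for_a[winner]
        # Zero-sum update: whatever A gains, B loses.
        ratings[model_a] = r_a + k * (s_a - expected_a)
        ratings[model_b] = r_b - k * (s_a - expected_a)
    return dict(ratings)


if __name__ == "__main__":
    # Placeholder model names and votes, for illustration only.
    battles = [
        ("model-x", "model-y", "a"),
        ("model-y", "model-z", "tie"),
        ("model-x", "model-z", "a"),
        ("model-z", "model-y", "b"),
    ]
    for name, rating in sorted(elo_ratings(battles).items(), key=lambda kv: -kv[1]):
        print(f"{name}: {rating:.1f}")
```

Because each update is zero-sum, the average rating stays at `base`; a larger `k` reacts faster to new votes but makes the ranking noisier.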

A/B testing isn’t just a nice-to-have analytics tool; it’s fundamental business infrastructure. Companies can now run hundreds of concurrent experiments, testing everything from button colors to algorithm changes and gathering insights that would have been impossible to obtain only a few decades ago. A/B testing also serves as risk management: organizations can expose a change to a small user segment before full rollout, preventing a potentially disastrous decision from reaching the entire user base. The most successful digital companies, from Google to Netflix to Booking.com, have built experimentation into their DNA, treating every product change as a hypothesis to be tested rather than an assumption to be trusted.
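The idea of exposing a change to a small segment first can be sketched as a staged ramp. This is a minimal sketch under assumed parameters: the stage fractions in `RAMP_STAGES`, the function names, and the `guardrail_ok` flag are all hypothetical. In practice that flag would come from comparing guardrail metrics, such as crash or error rates, between exposed and unexposed users.

```python
import hashlib

# Hypothetical ramp schedule: fraction of users exposed at each stage.
RAMP_STAGES = [0.01, 0.05, 0.20, 0.50, 1.00]


def hash_position(user_id: str, feature: str) -> float:
    """Stable pseudo-random position in [0, 1) for this user and feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def is_exposed(user_id: str, feature: str, exposure: float) -> bool:
    """A user is exposed if their fixed position falls below the current fraction."""
    return hash_position(user_id, feature) < exposure


def next_exposure(current: float, guardrail_ok: bool) -> float:
    """Advance one stage if guardrails hold; otherwise roll back to 0% exposure."""
    if not guardrail_ok:
        return 0.0
    later = [stage for stage in RAMP_STAGES if stage > current]
    return later[0] if later else current


if __name__ == "__main__":
    exposure = 0.0
    # Hypothetical guardrail results at each review point.
    for guardrail_ok in [True, True, True]:
        exposure = next_exposure(exposure, guardrail_ok)
        print(f"exposure now {exposure:.0%}")
```

Because each user’s hash position is fixed, anyone exposed at 1% stays exposed at 5% and beyond, so early users are not flipped back and forth as the ramp widens.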

In today’s data-driven landscape, making product decisions without causal studies or controlled experiments isn’t just risky—it’s negligent.

Must Read for A/B Testing: Trustworthy Online Controlled Experiments by Ron Kohavi, Diane Tang, and Ya Xu